The Ugly, the Bad and the Good

The Good, the Bad and the Ugly

Will AI upend science dissemination?

Kavli day

AI and machine learning in modern-day science

Den Haag, 5 October 2023

Jean-Sébastien Caux

Plan of the talk

Brief (from Kobus, Gijsje):

To what extent are you, as an editor, worried or excited or both about how ChatGPT (or a similar program) could affect the dissemination of our scientific results?

We need to talk.

- The Ugly

- The Bad

- The Good

The Ugly →

Instrumental convergence

Generated with Stable Diffusion

Turbocharged paper mills

Generated with Stable Diffusion

Deepfakes, Obfuscation & Impersonation

"Write this report in the style of [your academic rival]"

Supercharged fraud...

← The Bad

Copyright

LLMs are guilty of gargantuan-scale copyright infringement...

...but AI-generated stuff can't be copyrighted

Théâtre d’Opéra Spatial, Jason Allen (using Midjourney)

Confusion over editorial guidelines

Lack of clarity/consistency of editorial policies

Should ChatGPT be a coauthor? (plagiarism?)

Difficulty of detecting AI content using traditional plagiarism tools

Lack of honesty (ouch!) in acknowledging AI use

Laziness, slide towards mediocrity?

Laziness empowered: "just let the machine do it"

Safeguard (?!): "Humans should always do a check"

Have no illusions: this won't happen. Humans like to cut corners, and systems tend to reward bullshit

Long-term fear: truth drowning, with bogus research far outweighing correct research in volume

The ↑ Good

Smart harvesters and digesters

Huge opportunities for much-improved, flexible and highly optimizable apps

Super simple example: IArxiv

Challenge for publishers: introduce next-generation digesters and search tools

Assessors

Machines able to assess research works on:

- prose quality

- logical structure

- cohesion

- completeness

- correctness

- reproducibility

Accelerators / facilitators

- coding

- computing

- labwork

- data handling

- plotting

- writing (hum...)

New careers

harnesser / puppet master / detective

The Magic Sword of Muramasa

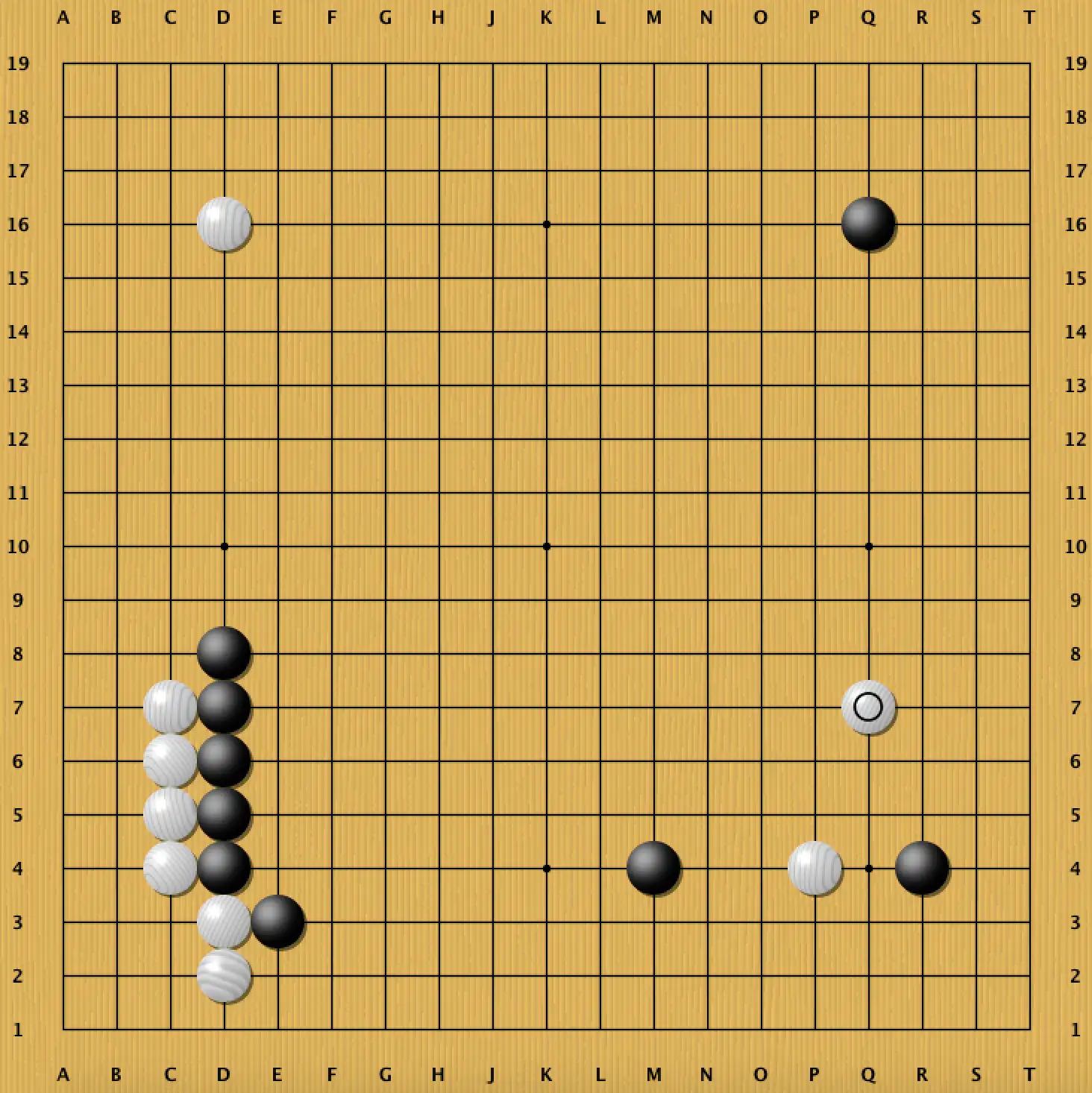

Setup of the joseki

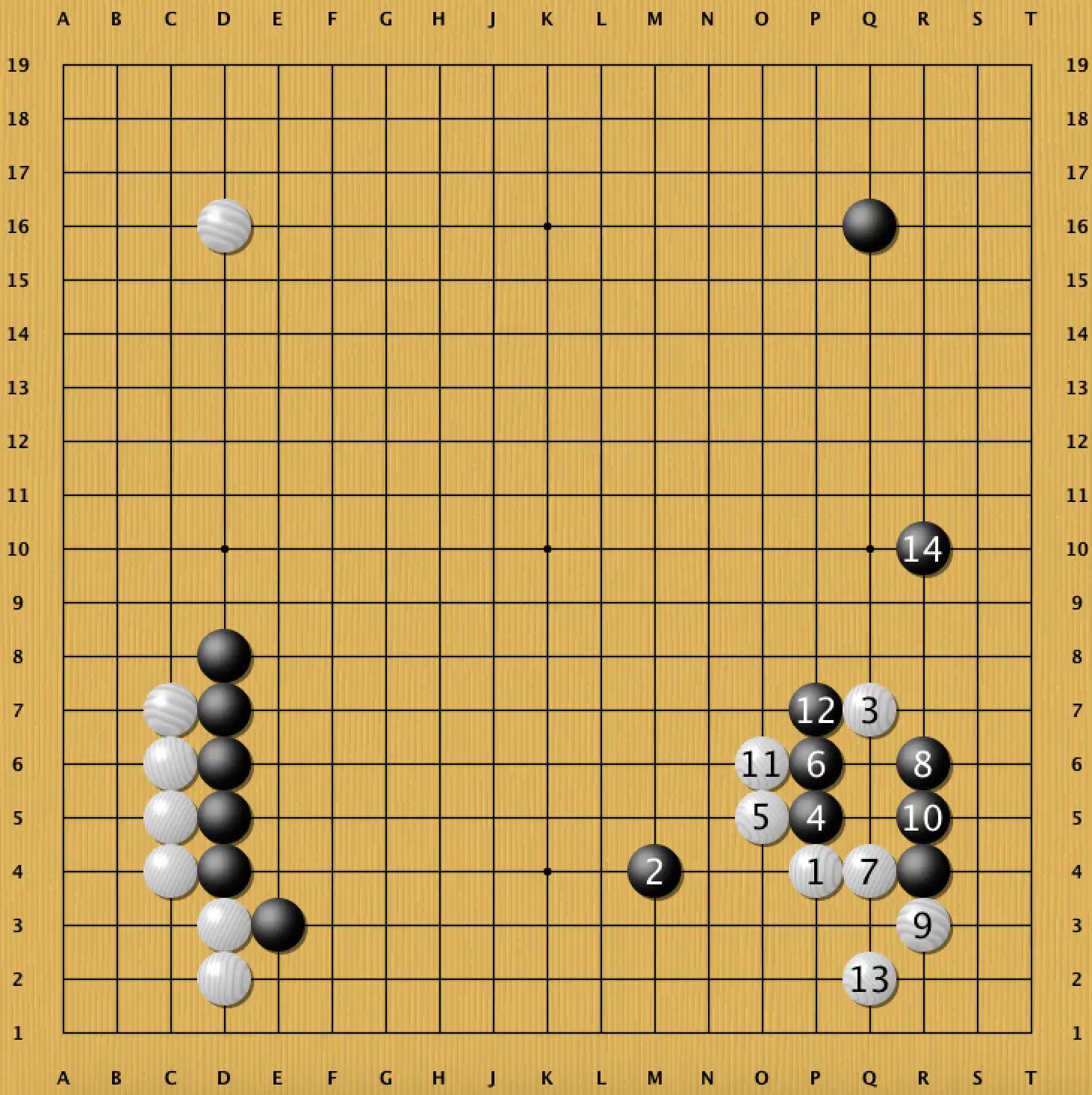


The traditional joseki

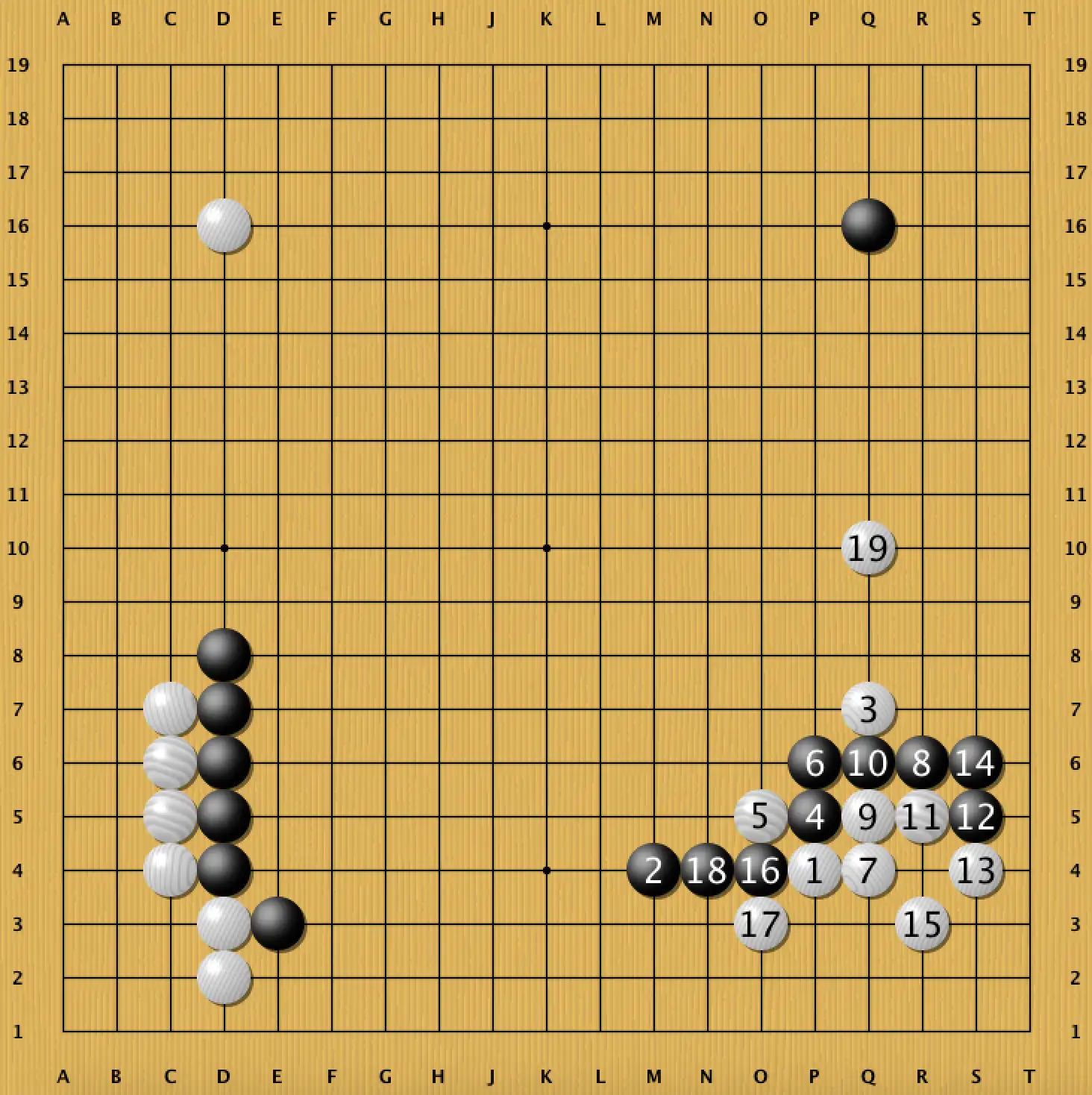


AlphaGo's innovation

The question of original thought

Definition of "original":

- not secondary, derivative, or imitative

- independent and creative in thought or action

Go: the machine is capable of "original" thought

Often read/heard:

AI can only rehash existing stuff, not invent anything new

I fundamentally disagree

The question of creativity

Nature (inanimate matter) is creative: it invented us

AI is clearly a step above inanimate matter

All the current commotion is not really about Machine Learning

In the end it's all about human learning, what future it has, and whether we give up, adapt or (need to) fight

Personal recommendations

Publishers:

- give clear guidelines

- don't assume they will be followed

- build AI into your search/discovery facilities

- discourage/penalize AI use in authoring

- resolutely fight the use of AI in writing reports

(writing reports is not the same as refereeing; it's only part of it)

- innovate, with reason

- try not to f... things up (cf. the OA snafu)


Scientists:

- go easy on using AI for authoring (namely: don't)

- go wild on using AI for discovery/digestion

- using AI for writing referee reports? Don't you dare

- work hard to keep up with the tech

- be critical (now more than ever)

- cling to the driving seat

- understand instrumental convergence. Avoid it.